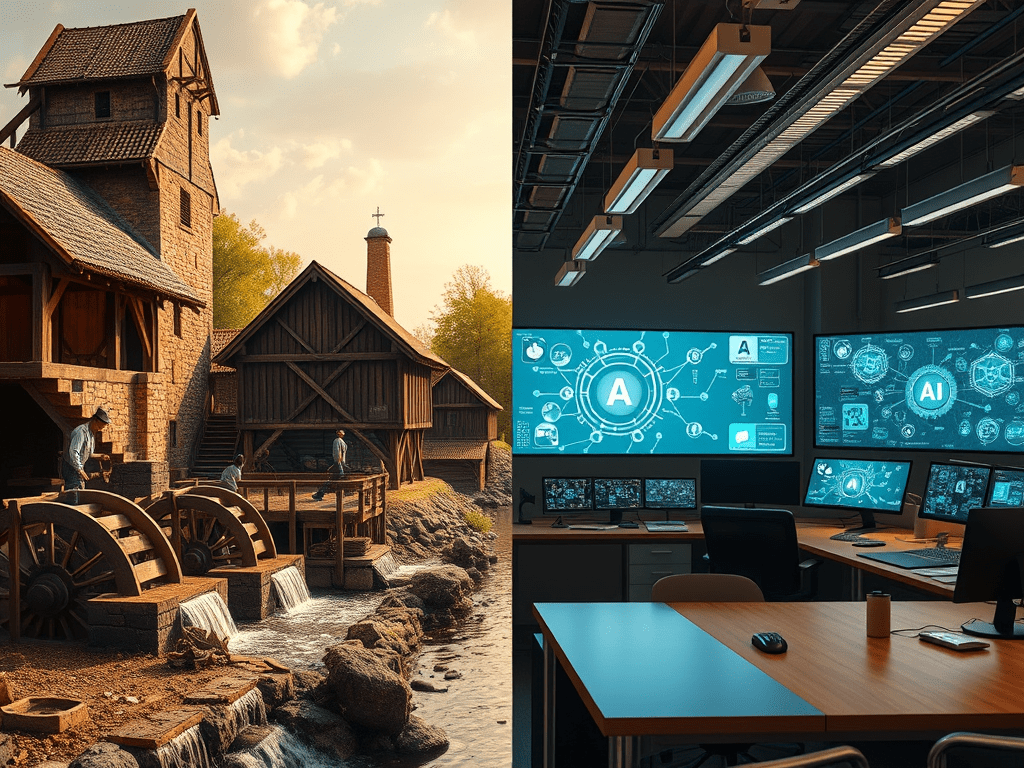

We’ve been automating labor for millennia—but only now can we skip understanding completely.

Millwrights in ancient Persia and medieval Europe captured the steady push of wind and water to grind grain and saw lumber—turning the brute labor of entire villages into the quiet arc of a wheel. Coal and steam amplified that leverage; the Industrial Revolution replaced muscle with piston, letting factories hum day and night. Petroleum engines shrank the factory into something that could move, and widespread electrification in the early 20th century laced power into every household gadget.

Each energy shift made the hard things easier, but never removed the need to understand the mechanism. A miller could still hear the stone’s pitch to know when grain was burning; an engineer could still trace a steam leak by sight and sound.

Then came the computer, and the locus of change moved from steel to thought. The graphical operating system put the command line behind icons; programs like Lotus 1‑2‑3 and QuickBooks, along with office suites and their friendly, if infamous, assistant Clippy, made spreadsheets, word processing, and accounting accessible to anyone who could point and click. The Internet rewired those isolated screens into a global lattice, and social media layered algorithms on top, shifting from analyzing traffic to directing it.

Now generative AI takes the next leap: give it a task—summarize this contract, build me a workflow, draft me a marketing plan—and it supplies the how. For the first time, a tool can perform skilled work without requiring the operator to understand the underlying skill. That difference is both miraculous and perilous.

Progress has always magnified mistakes: an assembly line can stamp a thousand flawed parts, and a single misconfigured relay can cascade through a power grid. But AI magnifies them at the speed of conversation. It can optimize infrastructure, streamline manufacturing, or escalate conflict before a human even reviews the change.

The question is no longer whether faster is better. It is whether faster without understanding is progress at all—because the moment the environment shifts, the miracle can become an untraceable failure.

The Siren Song of Effortless Creation

Low-code dashboards and AI prompt bars feel like a magic lamp: rub once and out comes a workflow, a data model, or a marketing blurb. The interface is so friendly that success on the first run feels like proof you suddenly understand integration, throttling, and OAuth tokens. In reality the work has only been displaced, not dissolved. Retry logic still executes, permission scopes still matter—the difference is that the complexity now sits under tinted glass.

Consider the analyst who strings together a SharePoint trigger, an Excel action, and a Copilot-written formula in under an hour. When quarterly volume doubles and the flow silently times out, she is surprised; the platform never mentioned service limits, and Copilot never taught her to log retries. What looked like mastery was only a successful guess. And when that guess fails—who’s left to fix what was never fully understood?

These tools make things look easier than they are. It’s not malicious—it’s frictionless by design. But friction, in small doses, teaches. When we bypass the learning curve entirely, we sacrifice resilience for speed. It’s the digital equivalent of replacing manual locks with an auto-locking system you can’t override: convenient until the battery dies and you’re locked out with no key.
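To make the hidden retry logic concrete, here is a minimal sketch of what "logging retries" could look like if the analyst's flow were written by hand. It is an illustration, not how any particular platform implements it: the function name `call_with_retries`, its parameters, and the exponential backoff schedule are all assumptions chosen for the example.

```python
import logging
import time

logger = logging.getLogger("flow")


def call_with_retries(action, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Run `action`, retrying with exponential backoff and logging every failure.

    `action` is any zero-argument callable (hypothetical stand-in for an API call).
    `sleep` is injectable so the function can be tested without real waiting.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return action()
        except Exception as exc:  # a real flow would catch narrower error types
            if attempt == max_attempts:
                logger.error("giving up after %d attempts: %s", attempt, exc)
                raise
            # Delay doubles each time: base_delay, 2x, 4x, ...
            delay = base_delay * 2 ** (attempt - 1)
            logger.warning(
                "attempt %d failed (%s); retrying in %.1fs", attempt, exc, delay
            )
            sleep(delay)
```

The point is less the backoff arithmetic than the log lines: when volume doubles and calls start timing out, the warnings leave a trail someone can follow.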

Fault Lines Beneath the Paint

Business landscapes shift as surely as tectonic plates: a merger swaps CRMs, compliance adds audit checkpoints, leadership decides that every notification must leap from email to Slack. In code you do not understand, each nudge lands like an aftershock.

The once-trusted workflow becomes a puzzle box. Extending it means reverse-engineering auto-generated expressions; troubleshooting becomes a treasure hunt through half-finished error messages. Ownership dissolves as quickly as interest: IT calls it shadow IT, the citizen developer moves on to new challenges, and the help-desk ticket pinballs between teams.

These fault lines reveal a deeper issue: no single team truly owns the solution. The business depends on it, IT doesn’t trust it, and the original author can’t fully explain it. Documentation is either nonexistent or buried in a private email thread. And when it breaks, the entire organization feels like it’s chasing smoke.

The brittleness isn’t just technical—it’s cultural. Without shared ownership and shared understanding, even small changes feel like high-stakes surgery.

Understanding as the Last Safety Net

Knowing how something works is unfashionable only until it fails. A mental model—however rough—buys three kinds of insurance:

- Foresight. Recognize the early tremor of a quota breach because you know tokens expire and APIs throttle.

- Flexibility. Refactor rather than rebuild when a column is renamed or a regional data residency rule appears.

- Memory. Explain the system to the next caretaker without resorting to “just trust the platform.”

The spare tire feels like unnecessary weight, until you blow a tire on a deserted road.

The best builders keep mental blueprints, even for tools designed to hide the wires. They don’t memorize every endpoint, but they know where the risks live. They design with drift in mind. They don’t panic at the first failure because they’ve already gamed out what could go wrong—and they’ve left signposts for the next person.

Understanding doesn’t mean memorizing every setting. It means cultivating the instincts to know where to look, who to ask, and how to reason through a blackout. It is the opposite of dependency. It is quiet competence—and in a crisis, it’s the difference between recovery and collapse.

Building for Tomorrow

Sustainable citizen development does not require stifling the joy of fast creation; it requires pairing that joy with a handful of professional habits:

Work in Pairs. Match each business creator with an IT guide. One brings requirements, the other guardrails. The duo designs flows that survive both audit season and vacation schedules.

Save the Blueprint. Even drag-and-drop flows can usually be exported as JSON or a similar text definition. Store those snapshots in Git or a versioned library so that rollback is measured in minutes, not memory.

Test on Purpose. Spinning up a sandbox environment costs less than repairing production reputations. Promote solutions through Dev, Test, and Prod so that “it worked on my screen” stops at the door.

Let the System Talk. Add verbose logs to a table, wire alerts to Teams, surface metrics on a dashboard. Silence is comforting until it masks a failure.

Review the Robot’s Work. Treat AI like an eager intern—bright, fast, occasionally reckless. Great to have on your team, dangerous to leave unsupervised. Read every generated formula, prompt chain, or SQL statement before it ships.

Write Living Notes. Keep a lightweight document that names the purpose, the inputs, the failure modes, and, most of all, the owner. The moment that owner changes jobs, the notes earn back every minute they took to write.
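As one possible way to act on "Save the Blueprint," the sketch below normalizes an exported flow definition before it is committed, so that version history shows real changes rather than reordered keys. It assumes the export has already been loaded as a Python dictionary; the helper names `canonical_snapshot` and `fingerprint` are hypothetical, and the example flow fields are invented for illustration.

```python
import hashlib
import json


def canonical_snapshot(export: dict) -> str:
    """Serialize with sorted keys and fixed indentation so diffs stay stable."""
    return json.dumps(export, sort_keys=True, indent=2, ensure_ascii=False) + "\n"


def fingerprint(export: dict) -> str:
    """Short content hash for a quick 'did anything change?' check."""
    digest = hashlib.sha256(canonical_snapshot(export).encode("utf-8"))
    return digest.hexdigest()[:12]


if __name__ == "__main__":
    # Invented example data; a real export would come from the platform.
    flow = {"trigger": "file_created", "actions": ["update_sheet", "notify"]}
    with open("flow.snapshot.json", "w", encoding="utf-8") as handle:
        handle.write(canonical_snapshot(flow))
    print("snapshot fingerprint:", fingerprint(flow))
```

Committing the resulting file after each change gives the rollback point the habit describes, and the fingerprint can go in the living notes so readers can tell which version the notes describe.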

Write It Down or Watch It Burn

Ask any veteran operations engineer for a horror story and you will get a version of the same plot: a critical workflow breaks the night after its author leaves, and the rescue team spends dawn reverse-engineering a stranger’s half-remembered logic. The cost of those all-hands war rooms dwarfs the time it would have taken to write a single README.

Documentation is not a bureaucratic afterthought; it is the schematic that lets future electricians trace the wiring without tearing down the walls. Treat it the way modern software teams treat code:

Keep it close to the source. Store JSON exports, prompt templates, and architecture diagrams right beside the solution in Git. A doc that travels with the code survives migrations and mergers.

Version it. README v1 should reflect Workflow v1. When the trigger changes, so should the docs. Git history provides the time machine.

Review it. If a pull request lacks an explanation of why a change was made, send it back. Five extra minutes now prevents five hours of archaeology later.

Automate the boilerplate. Let Copilot or a doc-as-code generator draft the skeleton—purpose, inputs, outputs. Then fill in the judgment, the edge cases, the hard-won insights no AI can guess.

Good documentation trades minutes of writer effort for hours of reader relief. More important, it models a mindset: this work matters enough to explain.
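A documentation skeleton of the kind described above can be small. The sketch below is one assumed shape, not a standard: the field list mirrors the living-notes items named earlier (purpose, inputs, failure modes, owner) plus outputs, and the function names `missing_fields` and `render_readme` are made up for this example. It drafts the headings and flags what a human still has to fill in.

```python
REQUIRED_FIELDS = ("purpose", "inputs", "outputs", "failure_modes", "owner")


def _text(value) -> str:
    """Treat None and whitespace-only values as empty."""
    return "" if value is None else str(value).strip()


def missing_fields(notes: dict) -> list:
    """Return the required fields that are absent or blank in `notes`."""
    return [field for field in REQUIRED_FIELDS if not _text(notes.get(field))]


def render_readme(name: str, notes: dict) -> str:
    """Draft a README with one heading per required field; blanks become TODO."""
    lines = [f"# {name}", ""]
    for field in REQUIRED_FIELDS:
        title = field.replace("_", " ").capitalize()
        lines += [f"## {title}", _text(notes.get(field)) or "TODO", ""]
    return "\n".join(lines)
```

A review step could refuse to merge while `missing_fields` returns anything, which turns "send it back" into a check rather than a judgment call. The judgment itself, the edge cases and the why, still has to be written by a person.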

Cultivating Curiosity

Tools can seduce people into passive consumption, but a culture of curiosity turns every screen into a doorway. Organizations that thrive on AI and low-code do not merely permit questions—they fund them.

Start small. Host fifteen-minute “show me” corners at the end of sprint reviews where a builder dissects one flow: Here’s the trigger, here’s the quota, here’s how I know it fails gracefully. Questions are mandatory; criticism is blameless.

Run quarterly “failure festivals.” Invite teams to present a near-miss or outage, focusing less on the bug and more on the blind spot that hid it. Record the session, tag it, and let newcomers binge the back catalogue of lessons you paid for once already.

Appoint mentors, not gatekeepers. An IT sponsor who helps a citizen developer instrument logging will later inherit a system that already speaks their language. Curiosity compounds when today's student becomes tomorrow's helper.

Finally, teach the workforce to interrogate the machine. Prompt Copilot with “Explain your plan” before you click Run. Ask the LLM to translate its own expression into plain English. Curiosity, applied to AI, turns a black box into a glass one.

In teams where inquiry is celebrated, understanding ceases to be a heroic act. It becomes the ordinary way work gets done—and that ordinariness is the strongest safeguard of all.

Blueprints for the Next Revolution

Waterwheels did not disappear when steam engines roared in; they shifted upstream and kept grinding grain where rivers still ran. Steam yielded to internal combustion, which yielded to electric motors, yet each newcomer inherited pipes, rails, and conductors laid by the last. Progress sticks when blueprints survive.

Generative AI is the first tool that builds itself as it builds for us. Quantum processors, self-healing networks, and autonomous agents are already waiting in the wings. The next leap will arrive long before we finish applauding this one.

So the modern professional’s mandate is clear: leave footprints wide enough for the next generation to follow. Treat every Copilot flow, every LLM-drafted routine, as heritage infrastructure. Version the prompt alongside the code. Sketch the architecture even if the boxes are virtual. Record “why” with the same respect we record “what.”

Do that, and tomorrow’s engineers will not have to rip out the foundation to add a story. They will unroll the user manual, trace the lineage of each module, and bolt on the breakthrough we cannot yet imagine.

Velocity is not the enemy; amnesia is. Build fast, but leave the breadcrumbs.
